The Platform for GenAI Solutions

With Vertesia's Large Language Model (LLM) software platform, enterprise teams design, test, deploy, and operate LLM-powered tasks that automate and augment their business processes and applications with security, governance, and orchestration to drive efficiency, improve performance, and lower costs.

What is the Vertesia Platform?

Vertesia Platform is an API-first LLM software platform with a composable architecture. It lets enterprise organizations focus on what they do best while helping them accelerate and future-proof how they experiment with, build, deploy, manage, and scale GenAI-augmented applications.

- The Web UI
- API & Integration Options
- Cloud Deployment

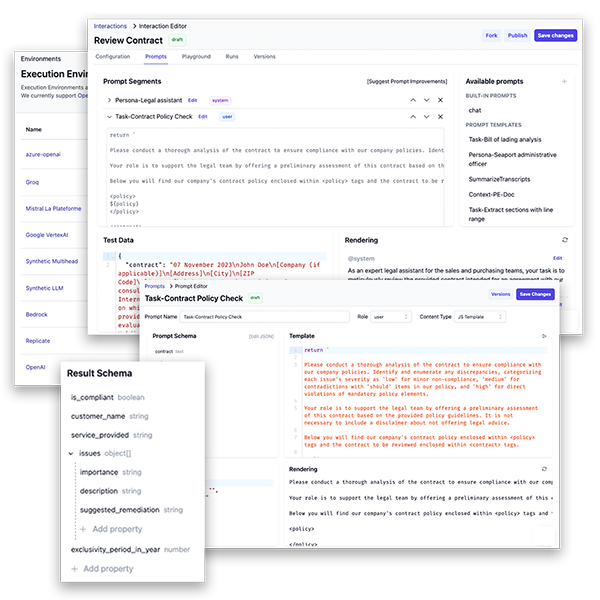

Studio

Studio is the web UI of Vertesia Platform. It enables enterprise teams to rapidly create, test, and deploy LLM tasks. Studio provides an easy and secure way to connect to all of the major AI providers through our open-source connectors. It also includes a prompt designer with prompt templates, an interaction composer where you define the tasks you want the LLM to perform, a playground to test, compare, and refine your prompts and models, the ability to fine-tune everything, monitoring and analytics to understand how your interactions and models perform, plus semantic RAG and workflow capabilities.

Multiple Integration Options

Vertesia is an API-first platform. Anything you can do in Studio, you can do via the API. We offer a REST API, OpenAPI/Swagger, JavaScript SDK, and CLI.

Multi-Cloud Deployment

Vertesia's multi-cloud SaaS is hosted on Google Cloud and AWS. Vertesia can also be deployed in any public or private cloud supporting container images and MongoDB.

AI/LLM Environments

Environments are where you connect to inference providers. Simply add your API key to connect to any of the major AI providers and access their LLM foundation models using our open-source connectors.

It's easy to assign different models to different tasks from any of the available inference providers at any time.

Virtualized LLM

Virtualized or Synthetic LLM is how we bring specialized models to the enterprise.

Virtualized LLMs can distribute tasks across multiple models so that no single model is a single point of failure. They can also send tasks to multiple models in parallel in order to assess and select the best result, evaluate and gradually roll out new models, or fine-tune lower-cost models based on the results of better-performing models.

Want to assign a task to Llama 2 instead of GPT-4 in 30% of cases? No problem.

Our Virtualized LLM capabilities enable:

- Self-improvement
- Specialization (& distillation)
- Gradual roll-out of new models
- Benchmarking
- Evaluation
- Model independence

Load Balancing

Distribute tasks across models based on weights.
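As a rough illustration of weight-based distribution, the sketch below picks a model with probability proportional to its weight. The `WeightedModel` shape, the `pickModel` function, and the model names are hypothetical and are not part of the Vertesia API.

```typescript
// Hypothetical shape for this sketch: a model name plus a relative weight.
interface WeightedModel {
  model: string;
  weight: number;
}

// Pick a model with probability proportional to its weight.
// `random` is injectable so the routing can be checked deterministically.
function pickModel(
  models: WeightedModel[],
  random: () => number = Math.random,
): string {
  if (models.some((m) => m.weight < 0)) {
    throw new Error("weights must not be negative");
  }
  const total = models.reduce((sum, m) => sum + m.weight, 0);
  if (models.length === 0 || total <= 0) {
    throw new Error("at least one model with a positive weight is required");
  }
  let threshold = random() * total;
  for (const m of models) {
    threshold -= m.weight;
    if (threshold < 0) return m.model;
  }
  // Guard against floating-point drift: fall back to the last model.
  return models[models.length - 1].model;
}

// Route roughly 70% of tasks to one model and 30% to another.
const routing: WeightedModel[] = [
  { model: "gpt-4", weight: 70 },
  { model: "llama-2", weight: 30 },
];
const chosen = pickModel(routing);
```

Changing the weights over time is one way a gradual roll-out of a new model could be expressed with the same mechanism.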

Shadowing

Execute a second model in the shadow of the main model for evaluation.
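One way to picture shadowing: the main model's answer is served, while a shadow model runs on the same prompt and its output is only recorded for later comparison. In this sketch, `ShadowableModel`, `ShadowRecord`, and `runWithShadow` are hypothetical names, and a model is assumed to be any async function from prompt to text.

```typescript
// Assumption for this sketch: a model is an async function from prompt to text.
type ShadowableModel = (prompt: string) => Promise<string>;

interface ShadowRecord {
  prompt: string;
  mainOutput: string;
  shadowOutput: string | null; // null when the shadow model failed
}

// Run the main and shadow models on the same prompt.
// Only the main result is returned; the pair is logged for evaluation.
async function runWithShadow(
  prompt: string,
  main: ShadowableModel,
  shadow: ShadowableModel,
  log: ShadowRecord[],
): Promise<string> {
  // Start the shadow call first; its failure must never affect the caller.
  const shadowCall: Promise<string | null> = shadow(prompt).catch(() => null);
  const mainOutput = await main(prompt);
  // Record in the background so the caller does not wait for the shadow model.
  void shadowCall.then((shadowOutput) => {
    log.push({ prompt, mainOutput, shadowOutput });
  });
  return mainOutput;
}
```

Because the caller never waits for the shadow model, a slow or failing candidate model can be evaluated without changing what is served.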

Multi-Head

Several LLMs execute the task, an evaluator selects the best result to serve, and all results are labeled.
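The multi-head pattern can be sketched as a parallel fan-out followed by a scoring step. Everything named here (`Head`, `Candidate`, `multiHead`, and the numeric evaluator) is a hypothetical illustration; the way Vertesia evaluates and labels results is not described in this document.

```typescript
// Assumptions for this sketch: a head is a named async model call,
// and the evaluator returns a numeric score where higher is better.
interface Head {
  name: string;
  call: (prompt: string) => Promise<string>;
}

interface Candidate {
  model: string;
  output: string;
  score: number; // the label attached to every result
}

async function multiHead(
  prompt: string,
  heads: Head[],
  evaluate: (prompt: string, output: string) => number,
): Promise<{ best: Candidate; all: Candidate[] }> {
  if (heads.length === 0) {
    throw new Error("at least one model is required");
  }
  // Run every model in parallel and label each output with its score.
  const all = await Promise.all(
    heads.map(async (head): Promise<Candidate> => {
      const output = await head.call(prompt);
      return { model: head.name, output, score: evaluate(prompt, output) };
    }),
  );
  // Serve the highest-scoring candidate; ties keep the earlier model.
  const best = all.reduce((a, b) => (b.score > a.score ? b : a));
  return { best, all };
}
```

Returning `all` alongside `best` keeps the labeled results available, for example as input to a later fine-tuning step.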

Self-Training

Automatically fine-tune lower-performing models with the results selected by an LLM or by human feedback.

Self-Improvement

Iterate on Self-Training so that the model converges and specializes on the task.

Prompt Designer

Prompt templates are the building blocks of prompts and are assembled in interactions to define a task. Select a prompt template from our library of examples, or create your own reusable prompt.

Reuse tested prompts and compose them to create more complex versions. In addition, prompts come with input and output schemas, which strengthen quality through type safety.

Prompts are automatically converted to the target model's format without any change on your side. We manage the syntax and transformation needed for each LLM.

Prompt Templates

Combine prompts with input schemas, variables, and test data to create a rendering of your prompt.
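To make the idea concrete, here is a minimal sketch of a template combined with an input schema and test data. The `{{name}}` placeholder syntax, the `PromptTemplate` shape, and `renderPrompt` are assumptions made for illustration; they are not Vertesia's template format.

```typescript
// Hypothetical minimal input schema: variable name -> expected primitive type.
type InputSchema = Record<string, "string" | "number" | "boolean">;

interface PromptTemplate {
  text: string; // uses {{name}} placeholders (a syntax chosen for this sketch)
  inputSchema: InputSchema;
}

function renderPrompt(
  template: PromptTemplate,
  data: Record<string, unknown>,
): string {
  // Check every declared variable against the schema before rendering.
  for (const name of Object.keys(template.inputSchema)) {
    const expected = template.inputSchema[name];
    if (typeof data[name] !== expected) {
      throw new Error(`variable "${name}" must be a ${expected}`);
    }
  }
  // Substitute placeholders; undeclared placeholders are rejected.
  return template.text.replace(
    /\{\{(\w+)\}\}/g,
    (_match: string, name: string): string => {
      if (!Object.prototype.hasOwnProperty.call(template.inputSchema, name)) {
        throw new Error(`placeholder "${name}" is not in the input schema`);
      }
      return String(data[name]);
    },
  );
}

const summarize: PromptTemplate = {
  text: "Summarize the following {{docType}} in at most {{maxWords}} words.",
  inputSchema: { docType: "string", maxWords: "number" },
};
const testData = { docType: "contract", maxWords: 50 };
const rendered = renderPrompt(summarize, testData);
// rendered is "Summarize the following contract in at most 50 words."
```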

Prompt Rendering

Write your prompt once and let our platform manage the syntax and transformations optimized for running against any LLM.
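The sketch below illustrates the general idea of writing a prompt once and rendering it for different targets. It assumes two generic target shapes, a chat-style message list and a single text prompt; the names `PromptSegment`, `toChatMessages`, and `toTextPrompt` are hypothetical, and the actual transformations the platform applies per model are not described here.

```typescript
// A model-neutral prompt: an ordered list of segments (assumed shape).
interface PromptSegment {
  role: "system" | "user";
  content: string;
}

interface ChatMessage {
  role: string;
  content: string;
}

// Target 1: models that accept a list of role-tagged chat messages.
function toChatMessages(segments: PromptSegment[]): ChatMessage[] {
  return segments.map((s) => ({ role: s.role, content: s.content }));
}

// Target 2: models that accept one plain text prompt.
function toTextPrompt(segments: PromptSegment[]): string {
  return segments
    .map((s) => {
      const label = s.role === "system" ? "Instructions" : "User";
      return `${label}: ${s.content}`;
    })
    .join("\n\n");
}

// The same prompt definition feeds both renderers.
const segments: PromptSegment[] = [
  { role: "system", content: "Answer in one sentence." },
  { role: "user", content: "What is retrieval-augmented generation?" },
];
const chatForm = toChatMessages(segments);
const textForm = toTextPrompt(segments);
```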

Prompt Library

Share, reuse, and innovate with a library of prompt templates to accelerate the creation of new LLM tasks.

Interaction Composer

Interactions define the tasks that the LLM is asked to perform. Define your task and output schema, add your prompt segments, and pick your LLM. You can even mediate or load balance between multiple LLMs.

Task Configuration

Give your task a name, choose an LLM or Virtualized LLM, and define the output of your task as basic text or a strongly typed schema.
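As an illustration of what a strongly typed output can buy you, the sketch below parses a model's raw text as JSON and checks it against a small schema before the result is used. The `OutputSchema` shape and `parseTypedOutput` are hypothetical and far simpler than a full schema language such as JSON Schema.

```typescript
// Hypothetical minimal output schema: field name -> expected primitive type.
type FieldType = "string" | "number" | "boolean";
type OutputSchema = Record<string, FieldType>;

// Parse raw model output and verify that it matches the schema.
function parseTypedOutput(
  raw: string,
  schema: OutputSchema,
): Record<string, unknown> {
  const parsed: unknown = JSON.parse(raw); // throws on invalid JSON
  if (typeof parsed !== "object" || parsed === null || Array.isArray(parsed)) {
    throw new Error("expected a JSON object");
  }
  const record = parsed as Record<string, unknown>;
  for (const field of Object.keys(schema)) {
    if (typeof record[field] !== schema[field]) {
      throw new Error(`field "${field}" must be a ${schema[field]}`);
    }
  }
  return record;
}

// Example: a sentiment task that must return a label and a confidence.
const sentimentSchema: OutputSchema = { label: "string", confidence: "number" };
const typed = parseTypedOutput(
  '{"label": "positive", "confidence": 0.92}',
  sentimentSchema,
);
```

Rejecting malformed output at this boundary means downstream application code can rely on the declared fields being present and correctly typed.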

Prompt Segments

Select from the available prompt templates to create the prompt segments, test data, and final prompt rendering that defines your interaction.

Prompt Assistance

Receive AI-driven recommendations for prompt designs, backed by custom training to refine LLM responses.

Playground & Fine-Tuning

Test, compare, and refine your prompts and LLMs. Publish your interaction when ready to deploy your AI/LLM task.

Playground

Run your interaction with test data against any LLM and stream the result in real time until the final output is displayed as a form or as JSON.

Publishing & Versioning

Publish your interaction with version control and an audit trail that keeps track of all history. Even fork an interaction to create something new.

Fine-Tuning

Fine-tune everything! Fine-tune your prompts, interactions, or LLM environments based on your runs.

Monitoring & Analytics

Monitor the execution of your tasks along with analytics to understand how your interactions and models perform.

Tests

Craft tests to validate interactions, ensuring ongoing consistency and adhering to enterprise standards.

Runs

Dive deep into LLM results, track iterative changes, and decipher variations to fine-tune LLM interactions.

Analytics

Stay atop LLM performance metrics. Monitor quality, latency, and overall system health for proactive management.

Content & Workflow

An intelligent content store to pre-process content for retrieval-augmented generation (RAG) and a workflow engine for orchestrating durable generative AI processes.

API & Integration

Enterprise teams can integrate LLM-powered tasks into existing applications or create brand new applications and services with multiple integration options. Expose interaction definitions as robust API endpoints, ensure top-notch schema validation, and minimize call latency.

REST API

The Composable API is a RESTful API to create, manage, and execute interactions.

OpenAPI/Swagger

The OpenAPI/Swagger spec describes the API for executing interactions and accessing runs.

JavaScript SDK

The JavaScript SDK library provides an easy way to integrate with applications using JavaScript.

CLI

The command line interface (CLI) can be used to access your projects from a terminal.

Commonly Asked Questions

What does API-first mean?

Everything you can do in the UI you can do through the API.

Where can Vertesia run?

Vertesia can run as SaaS or can be deployed on any cloud infrastructure.

Can Vertesia be used with custom models?

Yes, custom models can be accessed through any supported inference provider.

Is Vertesia an LLM application development framework?

No, Vertesia is an end-to-end platform that offers production-ready LLM services, a content engine, and agentic orchestration.