Vertesia's GenAI Solutions

From information extraction to content summarization, code assistance to co-piloting, the use cases are endless… well, at least in the thousands.

This page provides an overview of LLM-powered solutions to help you get started with LLMs and Vertesia, but this is not an exhaustive list!

LLM Solution Categories

LLM solutions fall into three main categories: Knowledge Querying, Content Analysis & Reasoning, and Generating or Repurposing Content. These categories can also be combined to create more complex use cases.

Generating or repurposing content, for instance, means using LLMs to create original content, repurpose existing content, or turn unstructured content into a more structured, personalized format.

Examples:

- Documentation Generation & Maintenance

- Code Generation for Tooling

- Content Summarization for Marketing

- Personalized Customer Communication

- Training and Educational Materials Creation

Knowledge Querying

Most enterprise information is unstructured — like contracts, memos, and emails — making it difficult to access and utilize effectively. This limits decision-making and extends processing times.

Traditionally, extracting value from unstructured content required manual schema creation and metadata extraction, followed by further interpretation. This process is time-consuming and inefficient.

Opportunities

LLMs allow organizations to interact with information based on its semantic meaning, bypassing the need for manual metadata.

For example, an LLM can identify and categorize problematic clauses in contracts based on contract size. This capability extends to various business areas, such as call centers, invoices, and emails, unlocking valuable insights from unstructured content and making them accessible through existing systems or chat interfaces.

Platform Approach

With an LLM software platform like Vertesia, unstructured information is semantically typed, structured, and enriched with business-specific knowledge, automatically.

Vertesia chunks content into semantic parts, enabling tiered analysis and similarity search across complex use cases. This approach simplifies the conversion of unstructured data into actionable insights, solving business problems that were previously unmanageable.
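As a rough illustration of chunking followed by similarity search, here is a minimal sketch. It is not Vertesia's implementation: splitting on blank lines stands in for semantic chunking, a bag-of-words count stands in for a real embedding model, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_by_paragraph(text: str) -> list[str]:
    """Split a document on blank lines (a stand-in for semantic chunking)."""
    return [part.strip() for part in re.split(r"\n\s*\n", text) if part.strip()]


def toy_embedding(chunk: str) -> Counter:
    """Bag-of-words term counts; a real system would call an embedding model here."""
    return Counter(re.findall(r"[a-z]+", chunk.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def most_similar(query: str, chunks: list[str]) -> str:
    """Return the chunk whose toy embedding is closest to the query."""
    query_vec = toy_embedding(query)
    return max(chunks, key=lambda c: cosine_similarity(query_vec, toy_embedding(c)))


contract = """The supplier shall indemnify the customer against third-party claims.

Payment is due within thirty days of the invoice date.

Either party may terminate this agreement with ninety days written notice."""

chunks = chunk_by_paragraph(contract)
best = most_similar("when is payment due after the invoice", chunks)
print(best)
```

With real embeddings the same structure applies: each chunk is embedded once at ingestion, and queries are compared against the stored vectors.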

Key Benefits

- Significant time and cost savings

- Extraction of valuable insights from existing information

- Enhanced decision-making and faster processes

- Improved risk management and automated knowledge creation

- Unlocking hidden value from organizational data

Content Analysis & Reasoning

Information analysis in organizations is often time-consuming, inconsistent, and costly due to the reliance on skilled analysts. While AI can’t fully replace human analysts, LLMs can accelerate analysis, improve consistency, and scale operations, allowing analysts to focus on critical tasks that require their expertise.

Traditional enterprise analysis depends on structured data, requiring extensive preprocessing to fit predefined formats. This inflexibility creates information silos, leading to inconsistent data and redundant efforts when new analyses are needed.

Opportunities

LLMs enable the dynamic analysis of raw information without extensive preprocessing. By using structured rules and guidelines, LLMs ensure consistent analysis across large datasets and allow for rapid iteration and adaptation to new inputs.

Leveraging the prior example, after identifying a problematic clause in a contract, an LLM can analyze its risk level using the company’s legal policy, providing a detailed compliance assessment. High-risk contracts are flagged for legal review, while low-risk ones are processed accordingly, streamlining the overall workflow.

Platform Approach

An end-to-end LLM software platform allows enterprises to quickly create robust analysis tasks and refine prompts that handle both structured and unstructured data.

Vertesia enables the rapid development and refinement of your analysis framework, automates data preparation, and supports large-scale execution with error management, extended processing, and retries.

We also enable multi-step analysis by using interim results from initial tasks as inputs for subsequent processes, ensuring thorough content evaluation.
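The idea of feeding interim results into later steps can be sketched as a small two-step pipeline. This is only an illustration under stated assumptions: the keyword rules below are stubs standing in for LLM calls, and `identify_clauses`, `assess_risk`, and `run_pipeline` are hypothetical names, not part of any Vertesia API.

```python
from dataclasses import dataclass


@dataclass
class ClauseFinding:
    clause: str
    category: str


def identify_clauses(contract_text: str) -> list[ClauseFinding]:
    """Step 1 (stub): a keyword rule stands in for an LLM classification call."""
    findings = []
    for sentence in contract_text.split("."):
        sentence = sentence.strip()
        if "indemnif" in sentence.lower():
            findings.append(ClauseFinding(sentence, "indemnification"))
    return findings


def assess_risk(finding: ClauseFinding) -> str:
    """Step 2 (stub): consumes step 1 output; a real task would prompt an LLM with the policy."""
    return "high" if "unlimited" in finding.clause.lower() else "low"


def run_pipeline(contract_text: str) -> list[tuple[str, str]]:
    """Chain the steps: interim findings from step 1 become the inputs of step 2."""
    return [(f.category, assess_risk(f)) for f in identify_clauses(contract_text)]


result = run_pipeline(
    "The supplier accepts unlimited liability and shall indemnify the customer. "
    "Payment is due in thirty days."
)
print(result)
```

Keeping each step as its own function makes it possible to test, retry, or swap the model behind one step without touching the others.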

Key Benefits

- Efficient use of skilled analysts

- Scalable, standardized analysis

- Flexibility to adapt to new business needs

- Cost-effective analysis of previously resource-intensive areas

Generating or Repurposing Content

With 90% of the world’s content created in the last two years, the pace of content production is accelerating. Enterprises rely on content to communicate, and much of this content builds on existing materials like meeting notes, emails, and documents. Effective content creation from diverse inputs is essential for empowering employees and engaging customers.

Content creation requires diverse inputs (e.g. ideas, documents, emails) and a clear output structure (e.g. white papers, briefs). Enterprises often struggle to process these inputs efficiently and produce high-quality content across various formats. Without a well-defined output format, content creation can suffer in speed, quality, and consistency, making the process time-consuming and challenging.

Opportunities

LLMs can generate initial drafts quickly, allowing humans to refine and edit as needed. Often, the draft from an LLM is sufficient, streamlining the content creation process. For example, after identifying and analyzing problematic clauses in contracts, an LLM can generate a comprehensive report by integrating source content and insights, ensuring a cohesive and informed output.

Platform Approach

Generating short-form content with LLMs is often straightforward, but creating complex long-form content that integrates multiple inputs is more challenging.

Vertesia addresses these challenges by offering tools that streamline even the most complex short- and long-form content creation.

Enterprises can easily integrate their inputs, which are optimized for LLMs, specify the output format, and leverage Vertesia's workflow engine and content generation algorithms to produce high-quality content while overcoming LLM token limits.
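One common way to work around a per-response token limit is to generate a long document one section at a time and stitch the results together. The sketch below illustrates that general technique only; it does not describe Vertesia's content generation algorithms. The `fake_llm` function is a hypothetical stand-in for a model call, and a word count is used as a crude proxy for tokens.

```python
from typing import Callable


def generate_long_form(
    outline: list[str],
    generate_section: Callable[[str, str], str],
    max_section_words: int,
) -> str:
    """Produce one section per call so no single response must hold the whole document."""
    sections: list[str] = []
    context = ""
    for heading in outline:
        body = generate_section(heading, context)
        words = body.split()
        if len(words) > max_section_words:
            # Truncate defensively; a real workflow might retry or split the section instead.
            body = " ".join(words[:max_section_words])
        sections.append(f"{heading}\n\n{body}")
        # Carry a short running context forward so later sections stay coherent.
        context = f"{context} {heading}".strip()
    return "\n\n".join(sections)


def fake_llm(heading: str, context: str) -> str:
    """Hypothetical stand-in for a model call; it simply echoes its inputs."""
    return f"Draft text for {heading}. Earlier sections: {context or 'none'}."


report = generate_long_form(["Summary", "Findings", "Recommendations"], fake_llm, 50)
print(report)
```

The total length of the report is bounded by the number of sections rather than by the size of any single model response.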

Key Benefits

- Quickly generate scalable content for publishing or refinement

- Integrate multiple sources for content creation

- Standardize and control input/output formats

- Overcome LLM response token limits

How to Evaluate, Select, and Implement LLM Use Cases

This comprehensive how-to guide covers the strategies, tactics, and best practices for selecting and deploying LLM use cases. It explores eight real-world use cases and explains the potential impact of LLM-powered tasks on business operations, efficiency, and innovation.

Contract Liabilities Monitoring

Liabilities are not always formalized or captured in a way that makes it easy for operations to take them into account. Forgetting them may result in significant penalties.

With Vertesia, contracts are chunked into clauses. Embeddings and contextual metadata are generated for both the clauses and the parent contracts. The platform regularly monitors a set of risks based on a guideline created by the business. Using semantic RAG, the platform automatically queries the specific contracts using structured identifiers generated by the LLM.

The system uses vector search to identify relevant clauses (e.g. Indemnification) in that subset of contracts and applies the correct policy rules. It verifies that the indemnification range is within what the guideline accepts and flags the contract for review if issues are detected.
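The final check, comparing an extracted value against the range a guideline accepts, can be sketched as follows. The data model is an assumption made for illustration: `Guideline`, `ExtractedClause`, the `cap` field, and the sample figures are all hypothetical, and the clause values are assumed to have been extracted upstream.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guideline:
    clause_type: str
    min_cap: float  # lowest acceptable liability cap (hypothetical field)
    max_cap: float  # highest acceptable liability cap (hypothetical field)


@dataclass(frozen=True)
class ExtractedClause:
    contract_id: str
    clause_type: str
    cap: float  # assumed to have been extracted upstream, e.g. by an LLM


def contracts_to_review(clauses: list[ExtractedClause], guideline: Guideline) -> list[str]:
    """Return IDs of contracts whose matching clause falls outside the accepted range."""
    flagged = {
        clause.contract_id
        for clause in clauses
        if clause.clause_type == guideline.clause_type
        and not (guideline.min_cap <= clause.cap <= guideline.max_cap)
    }
    return sorted(flagged)


guideline = Guideline("indemnification", min_cap=100_000, max_cap=1_000_000)
clauses = [
    ExtractedClause("C-001", "indemnification", 500_000),
    ExtractedClause("C-002", "indemnification", 5_000_000),
    ExtractedClause("C-003", "termination", 0),
]
flagged = contracts_to_review(clauses, guideline)
print(flagged)
```

Only clauses of the type named in the guideline are checked, so unrelated clauses never trigger a review.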

This results in:

- Better management of liabilities, with fewer breaches and crises

- Significant reduction in risk

- Improved brand image

Code Generation for Tooling

IT departments routinely perform many administrative actions on both digital and physical assets, such as software, personal computers, servers, storage systems, network elements, and more.

Automating IT tasks is critical and increases the need for writing automation scripts, for instance in PowerShell. These may be complex, long, too numerous or any combination of these factors. Scripts must also be updated regularly, resulting in increased IT workloads.

With Vertesia, users can automate PowerShell script generation: they express the requirement in functional terms, have the corresponding scripts generated, and select the most suitable LLM for the task.

This results in:

- Decreased IT staff workload

- Increased IT productivity

- Better service to employees & stakeholders with more personalized service

Problematic Clause Identification

An organization has identified a problematic clause in customer contracts that puts the company at risk. They now need to identify all related legacy contracts to properly anticipate associated risk. However, finding all occurrences of such a clause in legacy contracts is difficult, since the clause has been slightly modified and translated into several languages.

With Vertesia, the company ingests contracts with chunking turned on, and similarity is calculated automatically for all clauses of the chunked contracts. The user selects the problematic clause in a given contract and opens the “Similar” tab to see similar clauses and their parent contracts. The user can then filter by region, segment, or product and get the acceptable ranges for each based on actual history.

This results in:

- Significant time and energy savings

- Improved risk management

- Potential automation of risk management (i.e. automated detection of new occurrences of a problematic clause)

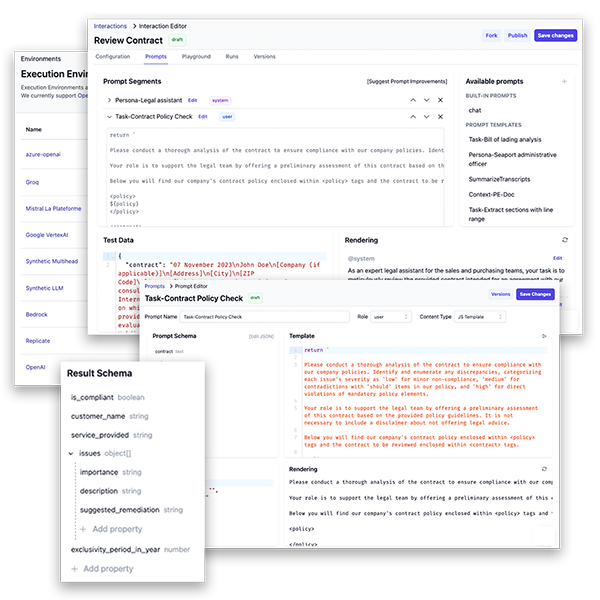

Complex Document Review

Organizations need to review documents and check compliance with policies, standards, and regulatory frameworks, whether those documents are internal or come from a supplier. Many teams are overloaded with review work, and most would not consider re-validating hundreds or thousands of legacy contracts against the company’s latest terms and conditions because the work is so tedious.

Vertesia's Approach

- Submit any kind of document to be reviewed (e.g. contract, project plan, product specification) as well as a specific set of rules (e.g. policy, standard, guidelines).

- Ask for a review returning both structured data, such as: “is compliant?”, “non-compliance issues”; and unstructured data, such as a “remediation plan” for each issue.
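A review that returns both structured and unstructured data could be modeled along the following lines. This is a sketch under assumptions: the `ReviewResult` and `Issue` types and their field names are hypothetical and only mirror the outputs named in the list above (a compliance flag, non-compliance issues, and a remediation plan per issue).

```python
from dataclasses import asdict, dataclass, field


@dataclass
class Issue:
    rule: str  # the rule from the submitted policy that was violated
    description: str  # structured finding
    remediation_plan: str  # unstructured, free-text guidance


@dataclass
class ReviewResult:
    document_id: str
    issues: list[Issue] = field(default_factory=list)

    @property
    def is_compliant(self) -> bool:
        """A document is compliant only when no issues were found."""
        return not self.issues

    def to_dict(self) -> dict:
        """Serialize the review, including the derived compliance flag."""
        payload = asdict(self)
        payload["is_compliant"] = self.is_compliant
        return payload


review = ReviewResult(
    document_id="supplier-contract-17",
    issues=[
        Issue(
            rule="Payment terms must not exceed 60 days",
            description="Clause 4.2 sets payment terms at 90 days.",
            remediation_plan="Renegotiate clause 4.2 to bring payment terms within 60 days.",
        )
    ],
)
summary = review.to_dict()
print(summary["is_compliant"], len(summary["issues"]))
```

Deriving `is_compliant` from the issue list, rather than storing it separately, keeps the two fields from contradicting each other.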

Key Benefits

- Easily process large volumes of documents that would not be processed at all otherwise

- Perform objective reviews

- Improve employee productivity by pointing collaborators directly to the parts of the document that need attention

- Detect and reduce risks

Earnings Call Transcripts Analysis

Publicly traded companies must communicate their financial results on a regular basis, typically quarterly. They do so in an earnings call, which produces an earnings call transcript. Financial analysts spend hundreds of hours reading and summarizing these transcripts, sometimes for thousands of companies. This is a massive investment of time that prevents analysts from focusing on more valuable activities.

Vertesia's Approach

- Auto-typing (e.g. transcript, financial data sheet), metadata extraction (e.g. company, fiscal year, quarter, sentiment)

- Quarterly transcript summary generation with extraction of main topics and key financial metrics

- Annual synthesis generation based on the quarterly summaries

- Year-over-year (YoY) analysis generation, with identification of dynamics (trends YTD/QoQ/YoY in revenues, operational margin, customer churn, etc.)
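The QoQ and YoY trends mentioned above reduce to simple percentage changes once the metrics have been extracted. The following sketch shows the arithmetic; the revenue figures are made up for illustration, and keying the data by `(fiscal_year, quarter)` is an assumption.

```python
def pct_change(current: float, previous: float) -> float:
    """Percentage change from previous to current."""
    if previous == 0:
        raise ValueError("previous value must be non-zero")
    return (current - previous) / previous * 100.0


Metric = dict[tuple[int, int], float]


def qoq(metric: Metric, year: int, quarter: int) -> float:
    """Quarter-over-quarter change: compare with the immediately preceding quarter."""
    prev = (year, quarter - 1) if quarter > 1 else (year - 1, 4)
    return pct_change(metric[(year, quarter)], metric[prev])


def yoy(metric: Metric, year: int, quarter: int) -> float:
    """Year-over-year change: compare with the same quarter one year earlier."""
    return pct_change(metric[(year, quarter)], metric[(year - 1, quarter)])


# Hypothetical quarterly revenue in millions, keyed by (fiscal_year, quarter).
revenue: Metric = {
    (2023, 1): 100.0,
    (2023, 2): 110.0,
    (2023, 3): 115.0,
    (2023, 4): 118.0,
    (2024, 1): 120.0,
    (2024, 2): 121.0,
}

print(round(qoq(revenue, 2024, 1), 2), round(yoy(revenue, 2024, 1), 2))
```

The same helpers apply to any extracted metric, such as operating margin or customer churn, as long as it is keyed the same way.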

Key Benefits

- Instant processing, homogeneity of produced results and more sophisticated analysis

- Content analysis and subsequent content generation are chained into a single end-to-end process

- Time savings can be spent on value-added tasks

Why use Vertesia for your LLM-powered tasks?

While LLM application development frameworks offer tools for building applications, Vertesia is an end-to-end platform with comprehensive capabilities and support for the entire enterprise LLM lifecycle, from ideation and experimentation to prompt design, task configuration, testing, monitoring, and optimization. We help prevent vendor and model lock-in by avoiding hard-coded prompts and LLMs, and we provide the flexibility to easily switch between inference providers and models.

Vertesia has the architecture to support multiple teams, use cases, LLMs, inference providers, and more, all within one UI. Governance, security, orchestration, virtualization, fine-tuning, workflows, semantic RAG, multiple integration and deployment options, collaboration, analytics, and monitoring are built into the product.

Expanded GenAI use case and user reach

Customers can explore multiple use cases in parallel, reducing the need to serialize each use case individually, while we provide a seamless UI and UX.

Comprehensive data privacy and security compliance, auditing, and data controls

Vertesia's guard rails, data controls, and auditing capabilities ensure our customers can limit and manage how and where their data is being used, maintain adequate access control, and perform version control.

Embedded life-cycle monitoring and management capabilities

Vertesia gives organizations vital visibility into their LLM-powered tasks, offering real-time performance insights, recommended optimizations, and the ability to implement updates remotely.

Robust content services and workflow engine

Vertesia unifies and optimizes the relationship between structured and unstructured data sources and LLMs across various documents, chatbots, and emerging assistant/agent use cases. We provide capabilities to help with indexing/vectorization, retrieval-augmented generation (RAG), and usage.

Seamless incorporation of new inference providers and models

Vertesia customers easily leverage new inference providers and LLMs without considerable refactoring, retraining, or reconfiguration. Our customers can adapt to new and emerging capabilities and models in a lightweight, often zero-touch way.

Improved solution accuracy and coherence across varying models and providers

Vertesia customers streamline the creation of their training data sets, compare model performance, reuse prompts across models, create complex orchestration pipelines, seamlessly transition to new foundation models, and monitor solution performance to help with cost and access controls.

With Vertesia, the possibilities are endless.

The examples above illustrate the transformative impact of LLM-powered tasks on various enterprise functions. By leveraging Vertesia, organizations can automate complex processes, enhance decision-making, and deliver personalized experiences, ultimately driving efficiency and innovation in their business solutions.